Meeting: A Visit from Professor Steve Furber

Professor Steve Furber was one of the people behind Acorn’s early computers. He visited us to talk about a range of topics including the development of the BBC Micro, the history of the ARM processor and how it owed its existence (at least partly) to BBC BASIC, and the research into neural networking that he and his team are currently undertaking at Manchester University.

Report by Ian Macfarlane

As part of the general theme of our 25th Anniversary, February’s meeting saw a visit from Professor Steve Furber of Manchester University. As one of the team behind the early days of Acorn, he was closely involved with the development of the BBC Micro and the ARM processor. Since the start of the 1990s, he has been involved with a number of areas of research at Manchester.

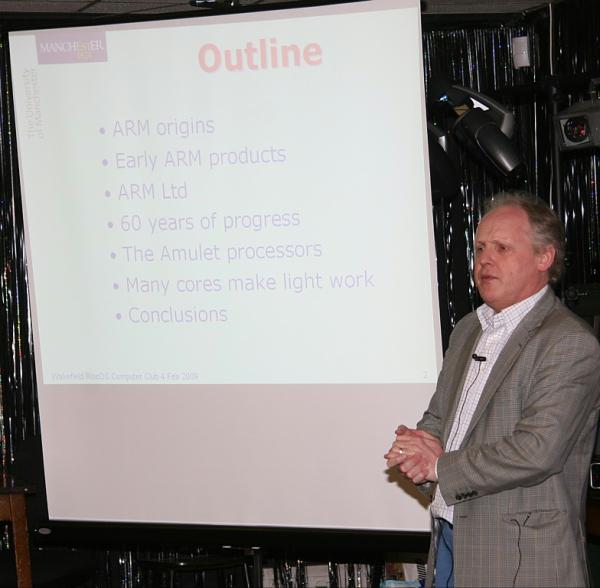

Professor Furber explained that he was not going to talk about RISC OS because, when he was at Acorn, he was involved on the hardware side; instead he would take us through the history of the ARM processor. His talk would encompass where the ARM came from, its early development, the formation of ARM, the company, and a review of where computing is going. One of the first computers was built in Manchester in 1948. In the ’90s he was working on the Amulet processors and at the moment he is working on systems for modelling the brain which use very large numbers of ARM processors.

Computer literacy

Acorn was put on the map in 1981 when it won the contract for producing the BBC Micro for the BBC. The initial estimate for the build was for 12,000 machines. In the end over 1.5 million were sold. Education was where the bulk of the machines were sold – in primary and secondary schools and also in university labs to replace the ASCII terminals attached to mainframe computers. Interest was so high that there was a domestic market as well, despite the high price-tag. This caused Acorn to grow exponentially and, importantly, the company never learned how to sell anything in the heady days of the early ’80s. The product’s specification was technically ambitious and the design margins were very small, but it was reliable in practice. He showed us a photo of the BBC Micro’s circuit board and pointed out that it had a very large number of components: there were slots for 102 components on the PCB!

Acorn then thought about replacing the 6502 8-bit processor. The trend was towards 16-bit processors such as the Motorola 68000 and the National Semiconductor 32016. They had richer instruction sets, but from Acorn’s view they didn’t deliver. Acorn brought out second processors for the BBC Micro and used the 32016, but it needed three clock cycles to accomplish anything, so that a clock of 6MHz only produced 2MHz processing speed. The jump to 16 bits was not buying very much. But worse than that, there were now new and complex instructions which took a very long time to finish, for example the divide instruction, which took 360 clock cycles to complete. During this period the processor was non-interruptible, so that there was a worst-case interrupt latency of at least 60 microseconds. Interrupts were used a lot on the BBC Micro, including one per byte transferred between processor and disc. The interrupt latency meant that data transfer was pegged at one byte every 60 microseconds, and any increase in performance would be stalled if these chips were to be employed.
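The latency figure follows directly from the numbers quoted in the talk. The short Python sketch below reproduces the arithmetic; the function and variable names are illustrative, and the derived byte rate assumes (as the report implies) that each byte transferred waits for one worst-case interrupt.

```python
def effective_rate_hz(clock_hz: float, cycles_per_operation: int) -> float:
    """Useful operations per second when each one needs several clock cycles."""
    return clock_hz / cycles_per_operation


def worst_case_latency_us(instruction_cycles: int, clock_hz: float) -> float:
    """Time in microseconds during which a long instruction blocks interrupts."""
    return instruction_cycles * 1e6 / clock_hz


CLOCK_HZ = 6e6        # 32016 clock quoted in the talk
DIVIDE_CYCLES = 360   # cycles for the divide instruction quoted in the talk

effective = effective_rate_hz(CLOCK_HZ, 3)
latency_us = worst_case_latency_us(DIVIDE_CYCLES, CLOCK_HZ)

# Assumption: one interrupt per byte, each delayed by the worst-case latency.
max_bytes_per_second = 1e6 / latency_us

print(f"Effective rate: {effective / 1e6:.0f} MHz")
print(f"Worst-case interrupt latency: {latency_us:.0f} microseconds")
print(f"Pegged transfer rate: about {max_bytes_per_second / 1000:.1f} kbytes/s")
```

On these figures the disc interface would be limited to under 17 kbytes per second in the worst case, however fast the rest of the processor ran.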

Hermann Hauser, the technical director, decided that Acorn should be designing its own chips, and design tools were obtained from VLSI Technology along with two (British) chip (but not microprocessor) designers. Steve and Sophie Wilson were sent over to the US to talk to the school leavers who were designing the successor to the 6502 – in a small bungalow on the outskirts of Phoenix! Steve and Sophie convinced themselves that they could design chips just as well. Very soon, in 1983, there was the sketch of a very simple microprocessor. The final product was always to be a desktop computer. The result was a four-chip design with the ARM (Acorn RISC Machine) processor being the central one and using the idea from Berkeley, California that processors should be much simpler than the developing trend of CISC processors. The Berkeley RISC 1 had out-performed any of these CISC processors. Acorn used the Berkeley ideas and the Acorn requirements to come up with a design for the new Acorn desktop (commercial) computer.

Early progress

The main design criterion for the ARM was the interface with memory, as this seemed to be the bottleneck in all designs. Memory was DRAM running at 4MHz. If the processor was 32 bits, then twice as much information could be sucked out of memory in the same time. Also DRAM had a fast access facility for sequential reads (twice as fast), which Acorn used throughout its design, such that there was an enormous increase in speed (25Mbytes/s as opposed to 4Mbytes/s). Today it is done with cache memory. Sophie designed the instruction set while Steve designed the hardware necessary to achieve it, and wrote the reference model. This model was written in BBC BASIC, and was 808 lines of code in length – which included the test environment. Steve recently found this and his notes, and asked ARM if he could put these on a website for historical purposes. ARM said “No” flatly – which drew chuckles from the audience.

Acorn did not put much resource into the design, for it felt sure that the large microprocessor manufacturers would bring out superior chips which Acorn could use in its desktop computer, but at the end of the eighteen-month design phase Acorn found it had its own chip ready for the new product. Hermann considered the two advantages of the new chip relative to its competitors. They were: no people and no money! Steve of course went on to explain this apparent anomaly: this state of affairs, no people and no money, brought great pressure to do the right thing. Every decision in the design of the new product was taken in favour of simplicity, because otherwise it would never be finished and it would never work. The simplicity pressure was very strong. Everything was designed on BBC Micros because the money wasn’t available to buy in any tools, apart from the VLSI kit.

At the end of the eighteen months Acorn had the design of the chip, which was hand crafted and so very unlike modern designs which are almost totally designed by computer. The first ARM was in 3 micron CMOS, on two-layer metal, with 25,000 transistors, covered 65 square millimetres, ran at 6MHz and came in at under a tenth of a watt. The energy dissipation was necessarily low because the chip had to be encapsulated in plastic, not ceramic, for cheapness. This lack of energy consumption wasn’t important for Acorn’s products, but was for the future of ARM. Two-layer metal was still experimental at this time but permitted much more economic chip design. The architecture worked very nicely with 26-bit addressing. “When this was extended to 32-bit addressing for Apple, the architecture was muddied a bit”, Steve said.

Finished products

The first processor to go into a product was the ARM2, which was a shrink-down on the original processor and consequently ran faster at 8MHz. This had been done whilst Acorn waited for the other chips of the set to be completed: the memory controller (MEMC), the video controller (VIDC) and the interface controller (IOC). This chip set went into the Archimedes A310 (1987) through to the A3000 (1992). In 1989 a cache was added, the processor was shrunk again to run at 20MHz, and it became the ARM3. New products were brought out with this chip but with the same VIDC, MEMC and IOC chips. The cache memory made a lot of difference, especially to high resolution screen modes, since the cache absorbed much of the processor’s bandwidth requirements. By 1990 the ARM company was formed. The ARM250 was the original four chips pushed onto one piece of silicon. The ARM7500 moved on to more sophisticated technology with floating point arithmetic.

Steve explained about how the ARM company came into existence. He had spent much of his time in 1989 and 1990 working on how to move the hardware design team out of Acorn and to be funded on an independent basis. However the numbers in his business plans just did not add up. The royalties on each processor were so small that millions of these processors would have to be produced in order for the business to be viable. Steve left Acorn for Manchester in August 1990. Two weeks later Apple came knocking on Acorn’s door: they were interested in using ARM for their Newton product, but they would not do that while it was owned by a competitor. Apple’s idea was to set up ARM as an independent company and, since this is what Acorn wanted too, the company was set up in November 1990.

The Newton as a product did not succeed but it was hugely important for ARM, since during its development ARM publicised the fact that the ARM was the processor going into the Apple Newton, which boosted ARM’s marketing enormously. This opened many doors for ARM. Although they continued to supply the chips to Acorn for desktop processing, they realised that total systems could now be accommodated on a single piece of silicon. This had huge implications for many different emerging markets. The ARM design used much less of the silicon area than the competition and so more complex systems could be installed on the same piece of silicon. The low power requirements of the ARM design were also an important factor in mobile applications.

Advanced RISC Machines

The ARM company was also very clever with the business model. Robin Saxby was brought in to lead the team, and he created the business model based on licensing. The problem with royalties is that they are very small and always a very long way off. The money comes in only when your customer is producing in volume, which could typically be five years from concept. Saxby’s innovation was to charge people to join the club. Saxby once told Steve that his early algorithm for charging customers was to double the joining fee for each successive customer! For a manufacturer wanting to license the ARM9 to be built into a wide range of products, the licence fee would be of the order of $10 million up front with royalties to follow.

Simplicity was a recurring theme in Steve’s talk. It was always compelling to add sophistication, but that inevitably leads to escalating costs and design slips. ARM did not try to extend the capabilities of the processor itself in the 1990s. Even today the highest selling processor is the ARM7, which is a three stage pipeline. What ARM did was to make it easier to use by dumbing down the techno-speak. They found that the market swelled as a result and this was their greatest achievement. Features were installed in the processor, such as ‘on-chip debug’ and ‘on-chip bus technology’, so that averagely competent engineers could use the processor to design their systems. ‘Process portability’ is another key feature of ARM, such that a design can much more easily be moved from one manufacturer to another. ‘Soft IP’ was yet another facility for selling a customer the source code, which has become ARM’s mainstream business.

As for product volume, by the end of 2007 over 10 billion ARM processors had been made – more than the planet’s population – and they are shipping at 10 million daily! In terms of the computer power that has ever been in existence, there is now more ARM computer power than all the others put together. Intel makes faster processors but far fewer of them: by value, ARM does not compete with Intel. As noted above, the ARM2 processor had 25,000 transistors, whereas the ARM7 has approximately 100,000. So in total, 10¹⁵ transistors have been used in 10 billion ARM processors and, by a Douglas Adams-style coincidence, this number is about the same as the number of synapses in the human brain – or that used to be in there somewhere!
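The transistor total is a simple product of the two figures quoted. A quick check in Python, assuming (as the talk did for the estimate) that every processor shipped has roughly the ARM7’s transistor count:

```python
processors_shipped = 10 * 10**9    # "over 10 billion" by the end of 2007
transistors_per_arm7 = 100_000     # approximate ARM7 figure from the talk

# Assumption: every processor counted is ARM7-sized, which is only a rough estimate.
total_transistors = processors_shipped * transistors_per_arm7

print(f"Total transistors: {total_transistors:.0e}")
```

The result is ten to the power fifteen, the figure Steve compared with the number of synapses in the human brain.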

Moore’s Law

Steve then recalled the history of computing: it was sixty years old. Gordon Moore, in 1965, observed that the industry was approximately doubling the number of transistors on a chip every eighteen months, and he confidently predicted that this trend would continue for ten years. However Steve showed us a graph which showed that Moore’s Law has just kept going to the present day, such that the points on his (logarithmic) graph were surprisingly close to a straight line. Steve joked that it has become the most important planning tool in the industry. Money is spent in research to fulfil the graph’s requirement in five years’ time: it has become a self-fulfilling prophecy. He likened this development to a raging inferno, which was limited by the oxygen – in this case cash – that could be sucked in at the bottom to drive the process.
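To get a feel for what a doubling every eighteen months means, the sketch below computes the growth factor over a given number of years. It takes the eighteen-month period quoted in the talk at face value; the function name is illustrative.

```python
def growth_factor(years: float, doubling_period_months: float = 18.0) -> float:
    """Multiplier on transistor count after `years` of steady doubling."""
    doublings = years * 12 / doubling_period_months
    return 2 ** doublings


ten_year_growth = growth_factor(10)
print(f"Growth over ten years: about {ten_year_growth:.0f} times")
```

Ten years of doubling at that rate is roughly a hundredfold increase, which is why the trend appears as a straight line only on a logarithmic graph.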

He showed us diagrams of cutaway, three-layer-metal chips, which showed the types of transistors that were being used as gates. Today’s chips, he said, had seven or eight layers of metal and, if it was blown up so that every transistor could be examined as shown in the diagram, then the chip would occupy an area of two square miles and within ten years it will be the size of the Greater Manchester area. If any one of the component parts fails, the whole chip is rejected. Designing a chip is at present like designing the road network of the world, including all footpaths, from first principles.

Rick Sterry asked what the width was of each track of metal. Steve thought that they would be of the order of a quarter of a micron. The chip that he was engaged in designing at present is 130 nanometres in size (an eighth of a micron) but the cutting edge is 45 nanometres. Ian Macfarlane asked what the rejection rate was. Steve said that for up to a centimetre square the yield was 90 to 95% whereas for twice that area, where Intel processors are at the moment, the yield would drop appreciably. The tracks are drawn using light at the blue end of the spectrum, whose wavelength is 390 nanometres. Ten years ago this was believed to be impossible but, by using diffraction grating technology, it has been made possible to work down to a third of the wavelength of light. Steve was not able to tell us how they have achieved 45 nanometre tracking.

What you can do with this is impressive, he said and he went on to consider the memory stick that we are familiar with. For a card with 12GBytes there must be 96Gbits of information. Since it is possible to charge a transistor at four different levels, two bits of information can be carried by one transistor. So the memory stick has fifty thousand million transistors on it! They are currently selling at £15. At 1950 transistor prices, you would be close to the GDP of the planet. Steve keeps his entire CD collection on his, and that only uses about a third of it. This is completely transforming the way music and film are used.
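The transistor count for the memory stick can be reconstructed from the figures given. This sketch assumes decimal units (one gigabyte as a thousand million bytes) and one storage transistor per cell, ignoring any control circuitry:

```python
capacity_gbytes = 12
capacity_gbits = capacity_gbytes * 8           # 96 Gbits, as quoted

charge_levels = 4                              # four distinguishable charge levels
bits_per_transistor = charge_levels.bit_length() - 1   # log2(4) = 2

storage_transistors = capacity_gbits * 10**9 // bits_per_transistor

print(f"{capacity_gbits} Gbits at {bits_per_transistor} bits per transistor")
print(f"Storage transistors: {storage_transistors:,}")
```

That gives 48 thousand million, which Steve rounded to fifty thousand million.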

The Manchester ‘Baby’

He showed us a photo of the working replica of the 1948 ‘Baby’ computer that was built for the fiftieth anniversary, and that now resides in the Manchester Museum of Science and Industry; every Tuesday lunchtime, it is fired up by the enthusiasts. He thought that it was fairly reliable when Rick asked about valve failures. The machine had 32 words of 32-bit memory, and people have devised animated graphics generating programs to run on it. He thought (to much laughter) that it should be essential training for every Microsoft employee!

The ‘Baby’ was about seven feet tall, used 3.5kW and executed 700 instructions a second. He then considered the current design, an ARM9 where the processor occupies half a square millimetre, uses 20mW of power and executes 200 million instructions per second. There are many ways to compare the two, but the way Steve liked was the fuel efficiency of computing. The ‘Baby’ used five joules of energy to execute an instruction. The ratio between the two was of the order of 50 thousand million. This number is, by another of Douglas Adams’ chances, the number of litres of fuel that the UK’s road transport uses in a year, so that if car engines had improved by the same ratio in that period then one litre of fuel would keep the UK going for a year. The oil crisis would be beyond the sell-by date of the planet and global warming wouldn’t be worrying us. If the garden snail had sped up by that factor it would now be slithering faster than the speed of light.
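The ‘fuel efficiency’ comparison is energy per instruction: power divided by instruction rate. A short Python sketch using only the figures from the talk (the names are illustrative):

```python
def joules_per_instruction(power_watts: float, instructions_per_second: float) -> float:
    """Energy used per instruction: watts are joules per second."""
    return power_watts / instructions_per_second


baby = joules_per_instruction(3500, 700)        # 1948 'Baby': 3.5kW, 700 instructions/s
arm9 = joules_per_instruction(0.020, 200e6)     # ARM9: 20mW, 200 million instructions/s

improvement = baby / arm9

# Figure from the talk: UK road transport uses about 50 thousand million litres a year.
uk_fuel_litres_per_year = 50e9
litres_if_engines_had_kept_pace = uk_fuel_litres_per_year / improvement

print(f"Baby: {baby:.1f} J per instruction")
print(f"ARM9: {arm9:.1e} J per instruction")
print(f"Improvement: {improvement:.1e} times")
print(f"UK annual fuel at that efficiency: {litres_if_engines_had_kept_pace:.1f} litre")
```

The ‘Baby’ comes out at five joules per instruction and the ARM9 at about a tenth of a nanojoule, giving the ratio of around fifty thousand million.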

Asynchronous and parallel processing

Having looked at the progress made in the field of integrated circuits, Steve then turned to his Manchester career. This began in 1990 when he started building Amulet processors, which were similar to ARMs but didn’t have clocks. Different bits of the chip go at different rates using local handshakes: every time data flows there is a Request / Acknowledge procedure going on to control the flow. This gives a style of logic that is driven by what needs to be done and it only uses the power it needs; it is also more flexible and doesn’t excite resonances in radio circuits and as such is more secure. He talked very briefly about the four chips that had been designed: each was a development of its predecessor, adding more components to the original processor in the same way that the ARM processors had developed, as he had previously described. The Amulet 1 (1994) was the first asynchronous implementation of a commercial microprocessor architecture and was code compatible with the ARM, which meant that it would run RISC OS – but that was never tried. The research showed that it was possible to build an asynchronous processor, but it was worse than the current ARM6 on all counts. Amulet 2 had more of a system to it, having a cache and a better I/O interface. Comparing this to the ARM7 and ARM8 there were some slight advantages beginning to appear, especially in terms of energy, but no real disadvantages.

At this point a German Telecoms firm asked to be permitted to test the Amulet 2 for radio interference. They were impressed by its electro-magnetic performance such that the next chip, the DRACO, was designed in collaboration with them and made it to silicon. Sadly the German firm folded. However the industry had moved into synthesised processors, and that is the direction that Steve’s research is moving now.

Steve talked about the ‘clock race’ that evolved just before the millennium between the two giants, Intel and AMD. Both put great effort into deep pipelining in order to hoist their processors’ clock speed as high as possible, for marketing reasons. Intel threw in the towel in 2003, saying that this idea was going nowhere, and they cancelled the Pentium 5 project. Instead of this, they would put multiple processors on the one chip. This was all very well, except for the problem of how they could be programmed. Steve envisages that a lot of money will be thrown at how to program parallel processors. Once it becomes possible to fully use parallel processors, Steve speculated that the need for CISC processors would disappear, since the ARM processors were so much more energy efficient.

The human brain

Steve said that his current research centred around this problem: of putting multiple processors as a system onto a chip and, in particular, building a very detailed, real-time model of the brain to allow neuro-scientists and physiologists to better understand how the brain works. They have received funding to cover this – to build a machine with a million ARM cores in it. This gives quite a lot of computing power, but represents only about 1% of the human brain. Manchester is collaborating with Southampton University at the moment, and Cambridge and Sheffield universities will be joining in the next round which is sponsored by EPSRC. ARM provide support for this project, supplying processor designs free, and Silistix is a spin-out company that is involved. Each node of the design is represented by two chips. He admitted that the project had gone way beyond the bounds of what could be achieved by a University research group. The design is kept reasonably simple by using both synchronous and asynchronous components. The asynchronous work that they did in the 1990s is feeding very strongly into the project. An important aim of the project is to understand how to build a reliable electronic system from an ever less reliable, large number of components. The components are becoming less reliable as they approach atomic size.

Steve got us to appreciate that we are losing about one neuron per second – more if we had been imbibing – but we don’t really notice any ill effects because the biology knows how to repair and maintain performance. This rate of loss is manageable since it accounts for only 1% of the brain and is normal in adult life. If 10% is lost then problems start to occur.

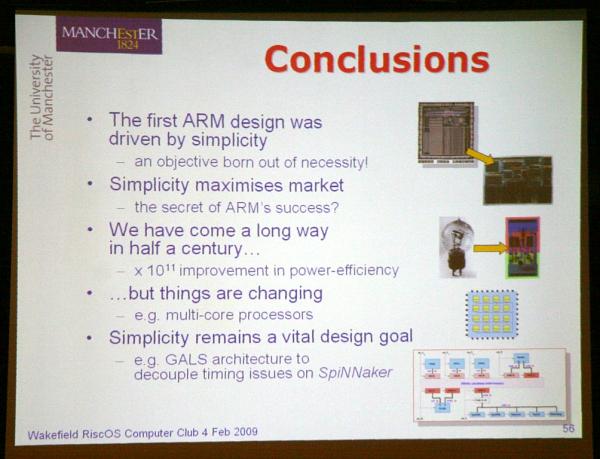

To conclude Steve said simplicity had been a recurrent theme. Lots of simplicity went into the first ARM design. Making things simple to use has maximised ARM’s market. Moore’s Law looks like continuing on its exponential progress, but things are changing structurally with the move to multi-core processors. The consequences of this change are quite hard to envisage. He had the forlorn hope (he said, to audience laughter) that in his current dual-core machine, one core would run Microsoft’s procedures and the other one would look after what concerned him. Simplicity was central to the design of his current project.

Questions from the audience

A member asked about the apparent goal of a single electron transistor – was it sensible? Steve said that they had been demonstrated, and research was progressing at Cambridge. Ultimately a bit of information can be stored if an electron can be held in the right place. The connection to the electron, and the detection of its presence, would dominate the area and volume used. The wiring to the cell must be shrunk as well.

Ian Macfarlane wanted to know which processor was scheduled to do which bit of the processing and Steve said that was the Holy Grail question. On the SpiNNaker machine most of the processors are doing neural modelling and they are all event driven. The code in these processors is all very small real-time assembly programs. Each incoming event causes a program of twenty or thirty lines of code to execute. This prompted Ian to ask if such a complicated processor was needed. Steve said that initially he thought that the neural modelling would take place in dedicated hardware and the most efficient way is using analogue hardware, but the algorithm is then frozen for ever. Some of the research is in refining these algorithms, and using programmable digital processors enables the algorithm to be changed very easily. Possibly when the algorithms become known, an analogue device could be built; the power requirements of such a device would be reduced by a factor of a thousand. The SpiNNaker machine, representing 1% of the human brain, will use about 50kW of electrical power compared to the 20W that a human brain uses!
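To put the power comparison on a like-for-like basis, the sketch below scales the SpiNNaker figure up to a whole brain. It assumes power scales linearly with the fraction of the brain modelled, which is a simplification made here rather than a claim from the talk.

```python
SPINNAKER_POWER_W = 50_000     # about 50kW, from the talk
BRAIN_PERCENT_MODELLED = 1     # the machine represents about 1% of a brain
BRAIN_POWER_W = 20             # power used by a human brain, from the talk
ANALOGUE_SAVING = 1000         # factor suggested for a future analogue device

# Assumption: linear scaling from 1% to 100% of the brain.
full_brain_digital_w = SPINNAKER_POWER_W * 100 / BRAIN_PERCENT_MODELLED
ratio_to_biology = full_brain_digital_w / BRAIN_POWER_W
full_brain_analogue_w = full_brain_digital_w / ANALOGUE_SAVING

print(f"Whole brain, digital: {full_brain_digital_w / 1e6:.0f} MW")
print(f"Compared with biology: {ratio_to_biology:,.0f} times more power")
print(f"Whole brain, analogue: {full_brain_analogue_w / 1e3:.0f} kW")
```

Even with the thousandfold saving from analogue hardware, a whole-brain machine on this reckoning would still draw far more power than the 20W of the biological original.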

Flexible simulations

Andrew Pinder wanted to know how flexible the SpiNNaker machine was. Steve said that all the algorithms were changeable. Andrew continued by asking about changing from speech recognition to picture recognition. Steve answered by saying that the connectivity of the neurons was also programmable, so that specifying both the connectivity and the functioning of the neurons gave a very versatile and flexible machine. There are several assumptions built into the model. The first is that primary communication is through spikes; a number of other neural models do not use the spiking paradigm for communication, but biology does. Each processor in SpiNNaker has 32Kbytes of memory.
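The idea of event-driven neurons with programmable connectivity can be illustrated with a toy model. The Python sketch below is not SpiNNaker code: the neuron names, weights, threshold and the simple accumulate-and-fire rule are all assumptions chosen for illustration. It shows only the general pattern, in which each spike event triggers a short handler and the wiring is just a table that can be changed.

```python
from collections import deque

# Hypothetical connectivity table: source neuron -> list of (target, weight).
# Changing this table rewires the network without touching the handler code.
connectivity = {
    "a": [("b", 0.6), ("c", 0.6)],
    "b": [("c", 0.6)],
    "c": [],
}


def run(initial_spikes, connectivity, threshold=1.0, max_events=1000):
    """Process spike events until none remain; return the neurons in firing order."""
    potential = {name: 0.0 for name in connectivity}
    events = deque(initial_spikes)   # neurons that have just spiked
    fired = []
    while events and len(fired) < max_events:   # guard against endless loops
        source = events.popleft()
        fired.append(source)
        # The short handler run for each incoming spike event.
        for target, weight in connectivity[source]:
            potential[target] += weight
            if potential[target] >= threshold:
                potential[target] = 0.0          # reset after firing
                events.append(target)
    return fired


print(run(["a"], connectivity))        # one spike is not enough to fire b or c
print(run(["a", "a"], connectivity))   # two spikes push b and c over threshold
```

Nothing happens in this model unless a spike arrives, which is the sense in which the processors are event driven; swapping in a different table or a different handler changes what the network does.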

Ian wanted to know if the human brain had its own clock: for instance, did it use the human pulse as a clock? Steve admitted that the principal operation of the human brain was not understood, in particular how information is conveyed around the brain. He was particularly interested in the cortex where most of the high level processing happens, and which is a very regular structure. The same basic structures are used for very different processes throughout the brain, so that there is something universal in the algorithms of the cortex that we don’t understand and seems to be quite important. One of the common properties of the brain is the idea of a topographic map. The outside world is somehow mapped onto the cortex, but it is often non-linear and the way the retina image is mapped is quite distorted, but not scrambled. All the bits of the brain that we understand seem to use these topographical maps as a way of representing information. If a topographical map is being used for natural language, what does it look like? Has a linguist ever thought of language in that way? The engineering instinct is that since it all looks the same, it must be doing the same.

Colin Sutton asked whether all the processors had the same program code. Steve said that they needn’t have the same code, but typically they would have similar code. However each processor had the ability to run a completely different program. Colin wanted to know if a million programs would have to be written. Steve said that a library of functional programs would be established for each of the existing and future models. Each processor would be given the code to model its type of neuron; each neuron can have its own local parameters. It would be very tempting to make each neuron identical, but that is not what is found in biology; biology thrives on diversity. There are only a modest number of types of neuron, but each instance of the type is different from each other instance; so diversity is important.

Derek Baron wanted to know if one of these parameters is the way the connections between neurons are made. The answer was that developmental changes are important and connections between neurons can be made dynamically whilst the model is being run.

Mapping the brain

Ian was concerned that everyone’s brain was different; would the model only represent one human’s brain? Steve said that wet neuro-science was very, very difficult – even what connects to what. The lab experiments only show very small parts of what is happening and the scientists have to postulate what is happening in between. The SpiNNaker machine is there to do small bits to test out these hypotheses. What is needed is a circuit diagram for the whole of a rat’s brain, but that is impossible. It was possible to use statistics to say what was probably connected to what. Steve’s final comment on the question was that although we are all different, we are also very similar.

Ruth Gunstone said that a year ago it was given out that Japanese and American scientists estimated that it would take thirty years to map a complete human brain. Would it then be possible to download a consciousness into a computer? Steve thought that, if they were right in their thinking today, then it would be possible to model the whole of the human brain in twenty to thirty years. That was the general consensus today. Steve said that there were a very large number of very difficult problems to solve. If progress was maintained then it would be possible to do that level of modelling at that time. However questions about consciousness were very difficult to answer. Steve had his own ideas about consciousness. If a full model was operative then some form of consciousness might emerge, if you could work out how to detect it. Steve said that the reason we find artificial intelligence so hard is because we are arrogant about our own intelligence.

Rick commented that it was hard to imagine an isolated consciousness without a sensory connection to the real world. Steve agreed, saying that any artificial intelligence has to have embodiment, but whether it had to be embodiment in the way we understand it is a question. He continued that humanoid robots exist, as does a European project called the Humanoid Robot Platform, and that a workshop on this topic was to happen the following week in Manchester. The SpiNNaker machine did not have to fit inside the body, since there could be wireless communication between the two or the internet could even be used to carry the signals. The SpiNNaker machine could only go so far without responding to sensory interrupts.

Rick closed the meeting, thanking the audience for coming and noting that they had probably learned lots of little phrases from Steve’s talk, which may prove useful at future parties. We thanked Steve in the usual manner.

